Onit is Proud to Join the Edge AI and Vision Alliance by Officially Introducing Dragonfly!

This blog post was originally published by Onit. It is reprinted here with the permission of Onit.

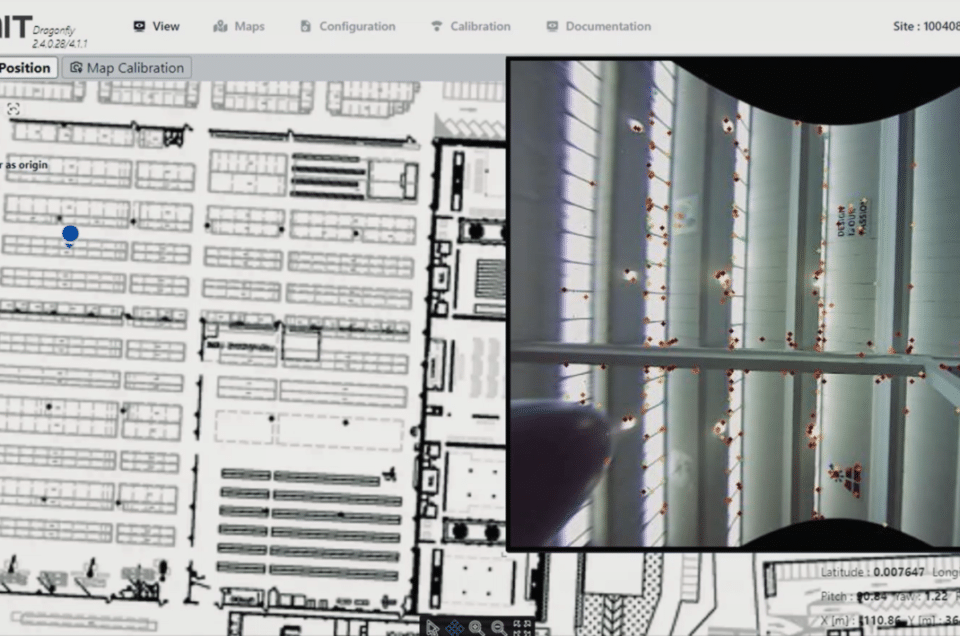

Dragonfly is a robust indoor localization technology that uses state-of-the-art visual SLAM (vSLAM) algorithms to locate and track any type of mobile vehicle that is (or can be) equipped with just a wide-angle monocular camera and a computing unit.

Dragonfly delivers, in real time or on a historical basis, the 3D coordinates and spatial orientation of robots, AMRs (autonomous mobile robots), drones, and industrial vehicles such as forklifts with centimeter-level accuracy. This lays the foundation for more complex applications aimed at improving productivity and safety in any vertical.

How does Dragonfly work?

The principle of operation is very simple:

- During the system setup phase: the wide-angle camera sends a video feed of its surroundings to the computing unit, which extracts environmental features from each frame and builds a 3D map of the environment. The map is geo-referenced using a DWG file (CAD floor plan) of the venue.

- During production use: the wide-angle camera sends a real-time video feed of its surroundings to the computing unit, which extracts features from each frame and compares them with those stored in the previously created 3D map. This allows Dragonfly to calculate the position and orientation of the camera in 3D space (and thus of the asset on which it is mounted).
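The post does not say how the DWG file is used to geo-reference the map. One common approach, assumed here purely for illustration, is to pick a few control points that are visible both in the vSLAM map and on the floor plan, then fit a 2D similarity transform (scale, rotation, translation) by least squares. The minimal sketch below treats floor-plane points as complex numbers, so multiplying by a complex number `a` applies scale `abs(a)` and rotation `phase(a)`. The helper names `fit_similarity_2d` and `map_to_plan` are hypothetical and not part of Dragonfly; height is ignored.

```python
import cmath
import math
from typing import List, Tuple

Point = Tuple[float, float]


def fit_similarity_2d(map_pts: List[Point], plan_pts: List[Point]) -> Tuple[complex, complex]:
    """Least-squares fit of plan = a * map + b on the floor plane.

    Points are treated as complex numbers: abs(a) is the scale factor,
    cmath.phase(a) the rotation in radians, and b the translation.
    Hypothetical helper for illustration only; not part of Dragonfly.
    """
    if len(map_pts) != len(plan_pts) or len(map_pts) < 2:
        raise ValueError("need at least two matching control points")
    z = [complex(x, y) for x, y in map_pts]   # control points in the vSLAM map frame
    w = [complex(x, y) for x, y in plan_pts]  # same points read off the DWG floor plan
    n = len(z)
    z_mean = sum(z) / n
    w_mean = sum(w) / n
    # Closed-form complex least squares on the centred coordinates.
    num = sum((wi - w_mean) * (zi - z_mean).conjugate() for zi, wi in zip(z, w))
    den = sum(abs(zi - z_mean) ** 2 for zi in z)
    if den == 0:
        raise ValueError("control points must not all coincide")
    a = num / den
    b = w_mean - a * z_mean
    return a, b


def map_to_plan(a: complex, b: complex, p: Point) -> Point:
    """Apply the fitted transform to one (x, y) map point."""
    q = a * complex(p[0], p[1]) + b
    return (q.real, q.imag)


if __name__ == "__main__":
    # Toy control points: the plan is the map scaled by 2, rotated 90 degrees,
    # and shifted by (10, 20).
    map_pts = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
    plan_pts = [(10.0, 20.0), (10.0, 22.0), (8.0, 20.0)]
    a, b = fit_similarity_2d(map_pts, plan_pts)
    print("scale:", abs(a), "rotation (deg):", math.degrees(cmath.phase(a)), "shift:", b)
    print(map_to_plan(a, b, (2.0, 3.0)))
```

With three or more well-spread control points, the least-squares fit averages out small errors in picking points on the drawing; two points already determine the transform exactly.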
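The post does not specify which features or matcher Dragonfly uses. As a generic illustration of the "compare with the map" step, the sketch below shows brute-force nearest-neighbour matching of binary descriptors (the kind produced by detectors such as ORB) using Hamming distance and Lowe's ratio test, a common technique in feature-based vSLAM. The toy 8-bit descriptors, the function names, and the 0.8 threshold are all assumptions. Recovering the camera pose from the resulting matches (for example with a PnP solver inside RANSAC) is a separate step not shown here.

```python
from typing import List, Sequence, Tuple


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match_ratio_test(
    frame_desc: Sequence[int], map_desc: Sequence[int], ratio: float = 0.8
) -> List[Tuple[int, int]]:
    """Brute-force nearest-neighbour matching with Lowe's ratio test.

    Returns (frame_index, map_index) pairs. A match is kept only when the best
    map descriptor is clearly closer than the second best, which discards
    ambiguous features that look alike in several places.
    """
    if len(map_desc) < 2:
        raise ValueError("ratio test needs at least two map descriptors")
    matches = []
    for i, d in enumerate(frame_desc):
        # Rank map descriptors by distance; ties fall back to index order.
        ranked = sorted((hamming(d, m), j) for j, m in enumerate(map_desc))
        (best, j_best), (second, _) = ranked[0], ranked[1]
        if best < ratio * second:
            matches.append((i, j_best))
    return matches


if __name__ == "__main__":
    map_desc = [0b00000000, 0b11110000, 0b00001111]    # toy 8-bit "map" descriptors
    frame_desc = [0b00000001, 0b11111111, 0b11100000]  # toy descriptors from one frame
    print(match_ratio_test(frame_desc, map_desc))  # prints [(0, 0), (2, 1)]
```

The second frame descriptor is equally close to two map descriptors, so the ratio test rejects it rather than guessing.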

Why is Dragonfly the present and future of indoor localization?

Dragonfly, and the visual SLAM technology on which it is based, represent the state of the art in indoor localization. Dragonfly is far more competitive than location technologies based on LiDAR, UWB, Wi-Fi, and Bluetooth. Unlike UWB, Wi-Fi, and Bluetooth, which require dedicated infrastructure to be designed, set up, calibrated, and maintained for each specific venue, Dragonfly's architecture is non-invasive: it needs only a camera and a computing unit on the tracked asset. vSLAM is also much more robust to environmental changes than LiDAR, which struggles considerably to maintain accuracy in environments where obstacles change over time. Finally, Dragonfly's distributed architecture improves reliability by eliminating the need for a mandatory central server, which would be a single point of failure (SPOF).

How can you integrate Dragonfly into your project?

Integration has never been easier. Dragonfly provides a powerful API that lets you retrieve the camera's real-time position, including altitude, at a rate of more than 30 Hz!
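The post does not document the API itself, so the record type and field names below (`PoseSample`, `timestamp_s`, `x`, `y`, `z`) and the units are hypothetical. As a sketch, this shows how a client might check that the stream it receives meets the stated rate: above 30 Hz, a new pose should arrive at least every 1/30 s, or about 33.3 ms.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class PoseSample:
    """Hypothetical pose record; the real Dragonfly API fields may differ."""
    timestamp_s: float  # capture time in seconds (assumed)
    x: float            # position in metres (assumed units)
    y: float
    z: float            # altitude


def mean_rate_hz(samples: Sequence[PoseSample]) -> float:
    """Average update rate over a window of consecutive samples."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    span = samples[-1].timestamp_s - samples[0].timestamp_s
    if span <= 0:
        raise ValueError("last timestamp must be later than the first")
    return (len(samples) - 1) / span


def max_gap_s(samples: Sequence[PoseSample]) -> float:
    """Largest interval between consecutive samples, in seconds."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    return max(b.timestamp_s - a.timestamp_s for a, b in zip(samples, samples[1:]))


if __name__ == "__main__":
    # Simulated stream: 41 samples at 40 Hz covering exactly one second.
    stream = [PoseSample(i / 40, 0.0, 0.0, 0.0) for i in range(41)]
    rate = mean_rate_hz(stream)
    print(f"{rate:.1f} Hz, meets 30 Hz: {rate > 30 and max_gap_s(stream) <= 1 / 30}")
```

Checking the largest gap as well as the average matters for control loops: a stream can average above 30 Hz while still stalling occasionally.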

What are the hardware specifications required to use Dragonfly?

Dragonfly works on x64 architectures (running natively on Ubuntu 18/20/22 and Windows 10/11) and on ARM64 architectures (such as NVIDIA Jetson and Raspberry Pi devices). For maximum performance, we recommend a computing unit with a CPU of 4 physical cores at 1.8 GHz and 8 GB of RAM. The camera must have a wide-angle lens that captures a wide area of the environment.

About Onit

Since 2001, Onit has been leveraging cutting-edge technologies such as AI and computer vision through ongoing R&D to provide innovative solutions for customers worldwide, working with dedication, courage, and enthusiasm.

Contact us today to find out more about Dragonfly and to schedule a face-to-face meeting with us at the Embedded Vision Summit (May 22-24, Santa Clara, CA), booth #812: www.dragonflycv.com

Abolfazl Zamanpour

Dragonfly Product Owner, Onit

Francesco Hofer

Dragonfly Product Specialist, Onit

Noam Livne

Dragonfly Product Specialist, Onit