Program Information

We’re building our 2026 program. See early speakers and sessions!

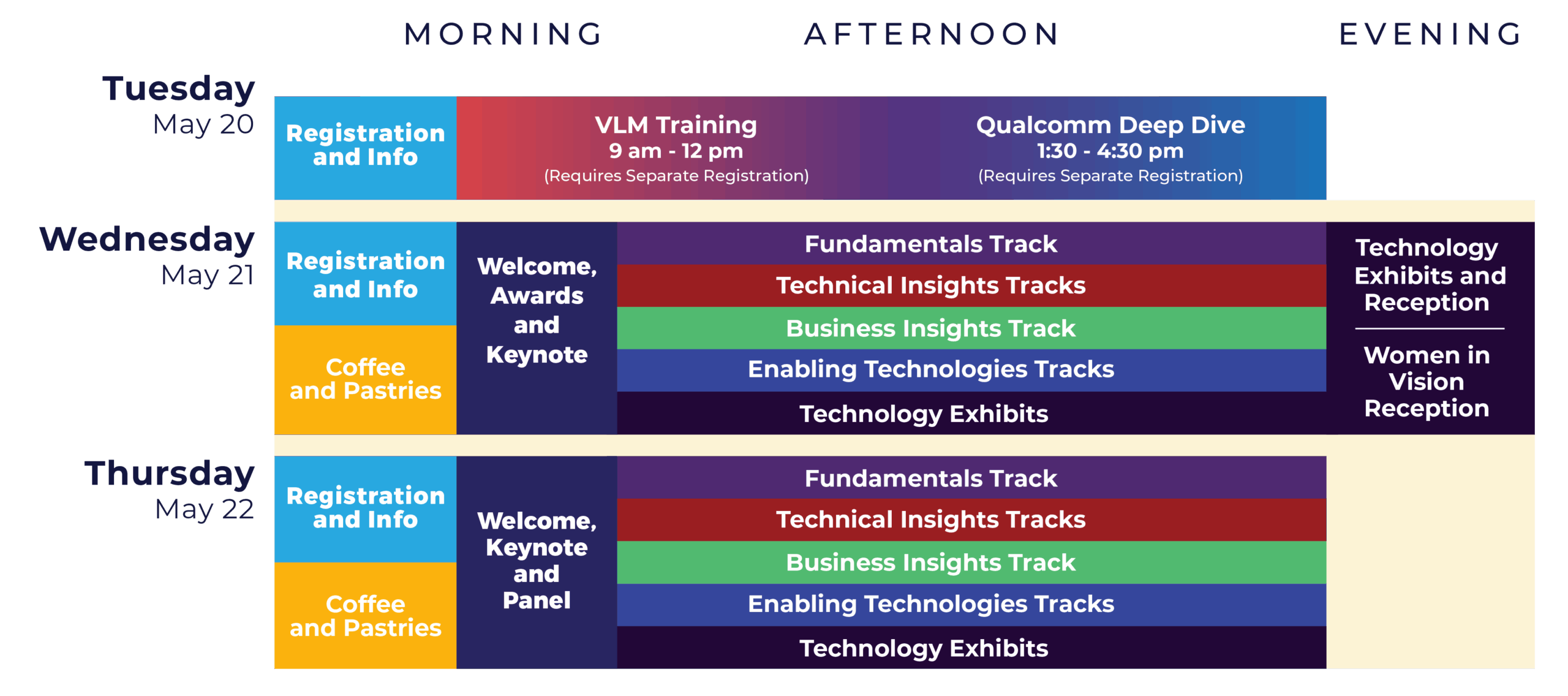

Tuesday, May 20

8:00 am - 5:00 pm:

Registration

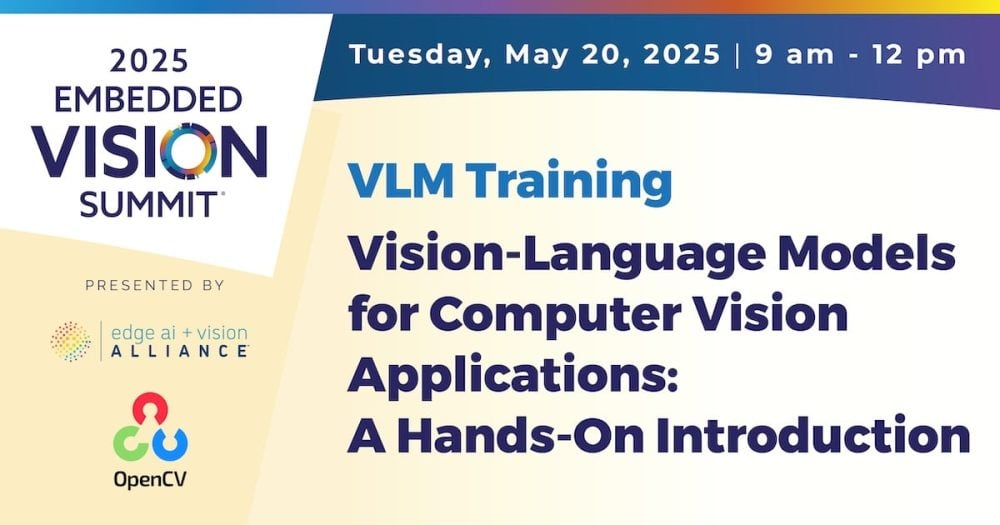

9:00 am - 12:00 pm:

Vision-Language Model Training (separate pass required)

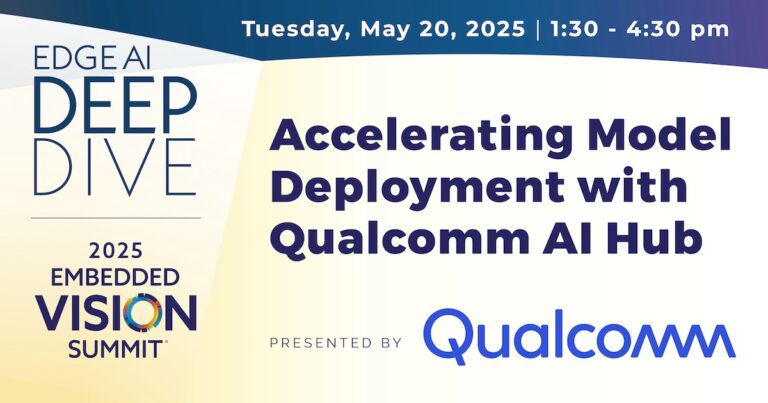

1:30 pm - 4:30 pm:

Qualcomm Deep Dive Session (separate pass required)

Wednesday, May 21

7:30 am - 7:00 pm:

Registration

9:00 am - 6:00 pm:

Conference tracks

12:30 pm - 7:30 pm:

Technology Exhibits

(Reception from 6:00 pm to 7:30 pm)

Thursday, May 22

7:30 am - 5:00 pm:

Registration

9:00 am - 6:00 pm:

Conference tracks

11:00 am - 5:00 pm:

Technology Exhibits

Sign Up to Get the Latest Updates on the Embedded Vision Summit Program

Leave us a few details to learn about the newest speakers, sessions, and other noteworthy news about the Summit program.

See you May 11-13, 2026, in Silicon Valley, California


Sponsors and Exhibitors

Interested in sponsoring or exhibiting?

The Embedded Vision Summit gives you unique access to the best-qualified technology buyers you'll ever meet.

Get in Touch

Want to contact us?

Use the small blue chat widget in the lower right-hand corner of your screen, or the form linked below.

Stay Connected

Follow us on X and LinkedIn.